Once the talk was brainstormed [have I mentioned how much I HATE MSWord - I used an organisation chart, and swore at it all morning while it did almost, but not entirely, the exact opposite of what I wanted - you get what you paid for] I then set about creating life ... quick Igor, throw the switch and raise the platform...

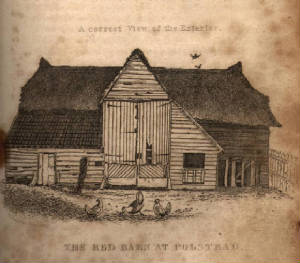

MUD first: In overview, I have to create a monster [settle Igor], customise its names, flag it as a woman, undead, a talker, give it a description and an initial talk string, some RPG char stats and then save it with a numeric index [that I prepared earlier]. I then teleported to the room the monster will live in [a good old shallow grave - see previous posting], popped a copy of that indexed monster, permed it into the room, saved the room and Bob's your Aunty we have a talking monster ... not quite. Although the monster is flagged as a talker, I still have to deposit a talk file, containing the keywords and responses separated by hard returns, into a special folder on the server [but that is UNIX, right, you know this ... lol, my FAVOURITE line from the Jurassic Park movie, where a shiny teen sits at a pooter with aggro velociraptors opening the doors beside her, up pops a bewildering graphical mess and she says ... "It's a UNIX system! I know this!" - laugh, I tell you], and then we have a live talker - "It's ALIVE muhahahaha!". The script I used to do this is here: MUD Maria Marten Script
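For anyone who prefers to see the talk file idea as code, here is a minimal sketch in Python [not the MUD's own language, and not its real loader]. It assumes the file simply alternates a keyword line with a response line, which is my reading of "separated by hard returns"; the function names `parse_talk_file` and `reply` and the sample lines are made up for illustration and are not taken from the actual Maria Marten script.

```python
def parse_talk_file(text):
    """Pair each keyword line with the response line that follows it.

    Assumption: the file alternates keyword, response, keyword, response ...
    with one hard return between each. The real MUD format may differ.
    """
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    if len(lines) % 2 != 0:
        raise ValueError("every keyword needs a response line after it")
    return {lines[i].lower(): lines[i + 1] for i in range(0, len(lines), 2)}


def reply(talk, said):
    """Return the response for the first keyword found in what was said."""
    # Lower-case and strip basic punctuation so "Murder?" still matches "murder".
    words = [word.strip(".,!?") for word in said.lower().split()]
    for keyword, response in talk.items():
        if keyword in words:
            return response
    return None  # the talker stays silent when no keyword matches


# Hypothetical sample content, invented for this sketch.
SAMPLE = (
    "murder\n"
    "It happened in the Red Barn.\n"
    "grave\n"
    "This shallow grave is no place to rest.\n"
)

talk = parse_talk_file(SAMPLE)
print(reply(talk, "Tell me about the murder?"))
```

The point is only that a talker boils down to a lookup table of keywords and canned replies; everything else is packaging.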

Now to MOO: In overview, I create an instance of a generic bot, named "Ghost of Maria Marten", travel to the room she will live in [again shallow grave], drop her there [when you make something other than a room in MOO, it is automatically in your inventory], then LOCK it into the room [this saves some well-meaning thieving mongrel coming in, picking it up and carting it away with them ... yes, it happens], then remove the already defined vocabulary [a generic bot is a Turing conversation engine, with keywords, random responses, question responses and grammar patterns able to be defined via the command line]. Then I add each of the keywords, followed by their responses [and I realise that the responses are not as rich as I normally make a MOObot respond; usually I apply 2 or 3 responses for each keyword, so that two people can converse with the bot and get different responses each time - might revisit that], then I ACTIVATE the bot and ... "It's ALIVE muhahahaha!" - this was all done at the command line in the MOO because I can, and because some of it has to be commands; some of the process can be mouse-driven, however. The script I used to achieve all this is here: MOO Maria Marten Script
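The "2 or 3 responses for each keyword" trick is easy to sketch. What follows is a rough Python model of the steps above [clear the vocabulary, add keywords with several responses, activate], not MOO code and not the generic bot's real commands. The class `KeywordBot`, its method names and the sample responses are all hypothetical, invented for illustration.

```python
import random


class KeywordBot:
    """Toy keyword bot: several responses per keyword, one picked at random."""

    def __init__(self, name, rng=None):
        self.name = name
        self.vocabulary = {}  # keyword -> list of possible responses
        self.active = False   # a bot says nothing until it is activated
        self.rng = rng or random.Random()

    def clear_vocabulary(self):
        """Remove any keywords the bot came with."""
        self.vocabulary.clear()

    def add_keyword(self, keyword, *responses):
        """Attach one or more responses to a keyword."""
        self.vocabulary.setdefault(keyword.lower(), []).extend(responses)

    def activate(self):
        self.active = True

    def hear(self, said):
        """Return a random response for the first matching keyword, or None."""
        if not self.active:
            return None
        words = [word.strip(".,!?") for word in said.lower().split()]
        for keyword, responses in self.vocabulary.items():
            if keyword in words:
                return self.rng.choice(responses)
        return None


bot = KeywordBot("Ghost of Maria Marten")
bot.clear_vocabulary()
# Hypothetical responses, invented for this sketch.
bot.add_keyword("barn", "I never left that barn.", "The barn keeps its secrets.")
bot.activate()
print(bot.hear("What happened in the barn?"))
```

With more than one response per keyword, two visitors asking the same question will not always hear the same answer, which is most of what makes a bot feel a little less canned.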

And finally to SecondLife: In overview, I find a comfy chair, beside a pool and an entertaining show to watch, while I drag my camera to where I want the character to be [actually walking or flying there is just so yesterday], create an object, shape and texture it to look like a human - actually, no, I lie - I made a cube primi and will worry about something that looks vaguely human later. Then I r-click, edit, contents and add a SCRIPT object to this primi containing a variant of a script originally written for me by Azwaldo [see previous postings - love your work mate], with all the appropriate keywords and keyword decisions with response actions edited syntactically into the object, select a channel for that critter to chatter on, touch the object and - "It's ALIVE muhahahaha!" - all done with point and click except the scripty thing, which is textual. The script I embedded in the Maria Marten object is here: SecondLife Maria Marten Script

And what did I learn from this - apart from "it's a hell of a way to EAT a rainy day and some bandwidth" - remarkably, the character creation process in all three environments is very similar conceptually. Sure, the syntax and conventions differ, but starting with a plan, I was actually surprised how easy it was to take the MUD version and, using good old search-and-replace, wrap that stuff up in the code and commands for each of the three environments.

Comparing and contrasting the artificial lifeforms: The SecondLife critter is least lifelike - I have not got any animations yet, and it does not yet even vaguely resemble a person, but it is a functional container for dialogue-based content. The MOO bot is cleverest in its conversational construction - when I also add randoms and question responses, you will be able to talk to it as you would another player in MOO, and it will get clever in sentence construction, remembering what was said previously - and when I add the animated graphics for icon and multi-media components of the bot it should be quite satisfying to deal with. The MUD talker is by far the most configurable, and when I talk to her, my mind seems to fill in the gaps - I find myself empathising with her plight and I know that is odd, as she is a bunch of words in an arcane interface and not even any colour ... but that is my brain, not yours. This whole theory of "multiple intelligences" suggests we all have preferred interaction and learning styles, so I should not be freaked out that a bunch of words speak to me at my level, just as you should not feel guilty for preferring to click on something ...

What is next - rinse and repeat, and then some artefacts to add richness in all three environments. Nice to see some progress, say hi to your mum for me. "Igor, we now have enough LIFE for a tennis match - break out the racquets and iced lemonade - tennis anyone?"
